Intel will reveal its 5th Generation Xeon (Emerald Rapids) processors on December 14. However, an Intel presentation titled "Data Centric Processor Roadmap" (via InstLatX64) hosted at the European Southern Observatory has spilled the beans on what Emerald Rapids brings to the server market.

Based on the same Intel 7 node as Sapphire Rapids, Emerald Rapids promises better performance per watt while packing more cores and more L3 cache. Comparing the Xeon Platinum 8592+ (Emerald Rapids) with the Xeon Platinum 8480+ (Sapphire Rapids), Intel claims that the former delivers up to 1.2X higher web (server-side Java) performance, 1.3X higher HPC (LAMMPS-Copper) performance, and 1.2X higher media (FFmpeg transcode) performance. The chipmaker's figures seem credible since Emerald Rapids wields faster Raptor Cove cores, whereas Sapphire Rapids uses Golden Cove cores. Moreover, the upcoming Xeon Platinum 8592+ has eight more cores than the existing Xeon Platinum 8480+.
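One way to test that reasoning is to strip the core-count gain out of Intel's claims. The sketch below assumes the Xeon Platinum 8480+ has 56 cores (the 8592+'s 64 cores minus the eight-core gap) and that throughput scales perfectly linearly with core count, which real workloads rarely achieve. Because linear scaling credits the extra cores with the maximum possible gain, the residual is a conservative (lower-bound) estimate of what per-core improvements contribute, not a measured figure.

```python
# Intel's claimed uplifts for the Xeon Platinum 8592+ vs. the Xeon Platinum 8480+
CLAIMED_UPLIFT = {
    "web (server-side Java)": 1.2,
    "HPC (LAMMPS-Copper)": 1.3,
    "media (FFmpeg transcode)": 1.2,
}

EMR_CORES = 64             # Xeon Platinum 8592+
SPR_CORES = EMR_CORES - 8  # Xeon Platinum 8480+: eight fewer cores


def per_core_uplift(total_uplift: float, new_cores: int, old_cores: int) -> float:
    """Divide out the core-count ratio, assuming perfectly linear core scaling."""
    return total_uplift / (new_cores / old_cores)


if __name__ == "__main__":
    for workload, uplift in CLAIMED_UPLIFT.items():
        residual = per_core_uplift(uplift, EMR_CORES, SPR_CORES)
        print(f"{workload}: {uplift:.2f}x total, ~{residual:.2f}x per core")
```

With these inputs, the residual works out to about 1.05X for the web and media claims and about 1.14X for the HPC claim, which suggests the extra cores account for much of the headline gain under this simple model.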

Given the recent AI boom, Intel didn't forget to highlight Emerald Rapids' substantial performance gains in AI, thanks to the company's built-in AI accelerators and Intel Advanced Matrix Extensions (Intel AMX). Intel is projecting uplifts between 1.3X and 2.4X, depending on the workload.

Emerald Rapids will also support faster native DDR5 memory and an enhanced Ultra Path Interconnect (UPI). While Intel didn't go into details, we expect Emerald Rapids to embrace DDR5-5600, up from DDR5-4800 on Sapphire Rapids. Meanwhile, Intel has likely upgraded the UPI speed from 16 GT/s to 20 GT/s on Emerald Rapids. Intel confirmed that Emerald Rapids will continue to provide 80 PCIe 5.0 lanes for expansion, and, according to the slide deck, it appears to have added Compute Express Link (CXL) bifurcation.

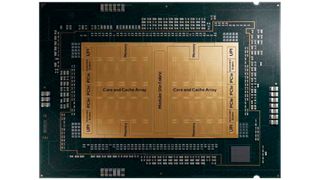

Even more interesting, though, is that Intel provided a die shot of Emerald Rapids. The floorplan shows a two-die design instead of the four-die design on Sapphire Rapids. Emerald Rapids' overall area is also rumored to be smaller than that of Sapphire Rapids, which may result from Intel reworking the layout to optimize space. Downsizing helps improve latency. The two big dies are connected through a modular die fabric.

Intel hasn't confirmed the core count on each Emerald Rapids die, but current speculation is that each die houses 33 cores, with one core disabled per die. Therefore, the top Emerald Rapids chip, which appears to be the Xeon Platinum 8592+, maxes out at 64 cores instead of 66. The increase in L3 cache is Emerald Rapids' most attractive selling point. Intel increased the L3 cache from 1.875MB per core on Sapphire Rapids to 5MB per core on Emerald Rapids, roughly a 2.7X increase. As a result, the top 64-core chip will have a whopping 320MB of L3 cache.
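The core and cache figures above follow from simple multiplication. The sketch below reproduces them under the rumored (unconfirmed) two-die, 33-core, one-disabled configuration, and assumes the 8480+ has 56 cores with the article's 1.875MB of L3 per core; the constant names are illustrative, not Intel terminology.

```python
# Assumptions: rumored 33-core dies with one core disabled per die (not
# confirmed by Intel), plus the per-core L3 figures quoted in the article.
DIES = 2
CORES_PER_DIE = 33
DISABLED_PER_DIE = 1
L3_PER_CORE_EMR_MB = 5.0    # Emerald Rapids
L3_PER_CORE_SPR_MB = 1.875  # Sapphire Rapids
SPR_8480_CORES = 56         # Xeon Platinum 8480+: eight fewer cores than the 8592+


def active_cores(dies: int, cores_per_die: int, disabled_per_die: int) -> int:
    """Cores left enabled after fusing off spare cores on every die."""
    return dies * (cores_per_die - disabled_per_die)


def total_l3_mb(cores: int, l3_per_core_mb: float) -> float:
    """Total L3 capacity, assuming a fixed amount of L3 per enabled core."""
    return cores * l3_per_core_mb


if __name__ == "__main__":
    emr_cores = active_cores(DIES, CORES_PER_DIE, DISABLED_PER_DIE)
    emr_l3 = total_l3_mb(emr_cores, L3_PER_CORE_EMR_MB)
    spr_l3 = total_l3_mb(SPR_8480_CORES, L3_PER_CORE_SPR_MB)
    print(f"Active cores: {emr_cores}, total L3: {emr_l3:.0f} MB")
    print(f"Per-core L3 ratio: {L3_PER_CORE_EMR_MB / L3_PER_CORE_SPR_MB:.2f}x")
    print(f"Total L3 vs. 8480+ ({spr_l3:.0f} MB): {emr_l3 / spr_l3:.2f}x")
```

The per-core ratio comes out to about 2.67X, while the 320MB total is roughly 3X the 8480+'s 105MB. The alternative 35-core, three-disabled layout floated in the reader comments also yields 64 active cores, so the top SKU's core count alone can't settle which rumor is right.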

Emerald Rapids' blueprint shows two memory controllers per die, so that's four in total. Each controller should manage two memory channels, enabling Emerald Rapids to retain eight-channel memory support, the same as Sapphire Rapids. Meanwhile, the diagram also shows three PCIe controllers, two UPI, and two accelerator engines per die.

Intel 5th Gen Xeon Emerald Rapids Specifications*

| Processor | Cores | Threads | Base Clock (GHz) | L3 Cache (MB) |
|---|---|---|---|---|
| Xeon Platinum 8593Q | 64 | 128 | 2.20 | 320 |
| Xeon Platinum 8592+ | 64 | 128 | 1.90 | 320 |
| Xeon Platinum 8592V | 64 | 128 | 2.00 | 320 |
| Xeon Platinum 8581V | 60 | 120 | 2.00 | 300 |
| Xeon Platinum 8580 | 60 | 120 | 2.00 | 300 |
| Xeon Platinum 8571N | 60 | 120 | 2.40 | 300 |
| Xeon Platinum 8570 | 60 | 120 | 2.10 | 300 |
| Xeon Platinum 8568Y | 60 | 120 | 2.30 | 300 |
| Xeon Platinum 8562Y | 12 | 24 | 2.80 | 60 |
| Xeon Platinum 8558 | 52 | 104 | 2.10 | 260 |
| Xeon Platinum 8558P | 52 | 104 | 2.70 | 260 |
| Xeon Platinum 8558U | 52 | 104 | 2.00 | 260 |
| Xeon Gold 6558Q | 12 | 24 | 3.20 | 60 |
| Xeon Gold 6554S | 36 | 72 | 2.20 | 180 |
| Xeon Gold 6548Y | 12 | 24 | 2.50 | 60 |
| Xeon Gold 6548N | 12 | 24 | 2.80 | 60 |
| Xeon Gold 6544Y | ? | ? | 3.60 | 45 |
| Xeon Gold 6542Y | 12 | 24 | 2.90 | 60 |
| Xeon Gold 6538Y | 12 | 24 | 2.20 | 60 |
| Xeon Gold 6538N | 12 | 24 | 2.10 | 60 |
| Xeon Gold 6534 | ? | ? | 3.90 | 22.5 |
| Xeon Gold 6530 | 32 | 64 | 2.10 | 160 |
| Xeon Gold 6526Y | ? | ? | 2.80 | 37.5 |
| Xeon Gold 5520 | ? | ? | 2.20 | 52.5 |
| Xeon Gold 5515 | ? | ? | 3.20 | 22.5 |
| Xeon Gold 5512U | ? | ? | 2.10 | 52.5 |
| Xeon Silver 4516Y | ? | ? | 2.20 | 45 |
| Xeon Silver 4514Y | 6 | 12 | 2.00 | 30 |

*Specifications are unconfirmed.

Hardware detective momomo_us has shared a list of the alleged Emerald Rapids processors that Intel will unleash on the server market. The Xeon Platinum 8593Q appears to feature the same 64-core, 128-thread configuration as the Xeon Platinum 8592+, which Intel used for comparison. However, the former has a 300 MHz higher base clock (2.20 GHz vs. 1.90 GHz), and the "Q" suffix denotes an SKU with a lower Tcase tailored for liquid cooling. Meanwhile, the Xeon Platinum 8592V is a variant of the Xeon Platinum 8592+ that is better optimized for SaaS cloud environments.

Intel has prepared a thorough stack of Emerald Rapids SKUs to compete in every price bracket. Among the listed SKUs with known core counts, the Xeon Platinum models range from 12 to 64 cores, while the Xeon Gold parts span 12 to 36 cores. Lastly, the entry-level Xeon Silver SKUs start at six cores.

Emerald Rapids is drop-in compatible with Intel's current Eagle Stream platform and its LGA4677 socket, which lowers the total cost of ownership (TCO) and minimizes server downtime for upgrades. Furthermore, the new 10nm-class chips will allow Intel's customers to refresh their server products to keep competing with AMD's EPYC, specifically the 5th Generation EPYC Turin, which will arrive before the end of the year.


Zhiye Liu is a Freelance News Writer at Tom’s Hardware US. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.


hotaru251

somehow don't think 1.4x over its old model is gonna beat EPYC, as iirc wasn't EPYC nearly 2x as fast?

bit_user

> hotaru251 said: somehow don't think 1.4x over its old model is gonna beat EPYC, as iirc wasn't EPYC nearly 2x as fast?

It's not a big enough increase to overcome 96-core EPYC on general-purpose cloud workloads. However, it's probably enough to score wins in more niches, particularly in cases where the accelerators come into play.

The big question I have is whether Intel is going to be as parsimonious with the accelerators as they were in the previous gen.

I recall how they restricted AVX-512 to single-FMA for lower-end Xeons, in Skylake SP + Cascade Lake, but then in Ice Lake, they were at such an overall deficit against EPYC that they just let everyone (Xeons, I mean) have 2 FMAs per core.

So, I could see Intel further trying to overcome its core-count & memory channel deficit by enabling more accelerators in more SKUs. Or will they still try to overplay this hand? They can always tighten the screws again, in Granite Rapids.

bit_user

> The chipmaker's performance figures seem credible since Emerald Rapids wields the faster Raptor Cove cores than Sapphire Rapids, which uses Golden Cove cores.

The P-cores in Alder Lake vs. Raptor Lake only changed in the amount of L2 cache. The server cores usually have more L2 cache than the client versions, anyhow. So, I also have to question how meaningful that distinction is, in this context.

> substantial performance gains in AI thanks to the company's built-in AI accelerators and Intel Advanced Matrix Extensions (Intel AMX). Intel's projecting uplifts between 1.3X to 2.4X, depending on the workload.

I'm going to speculate the upper end of that improvement is thanks mainly to the increased L3 capacity.

> Downsizing helps improve latency.

Not sure about that. I think it's mainly a cost-saving exercise. The main way you reduce latency, at that scale, is by improving the interconnect topology or optimizing other aspects of how it works.

> Intel hasn't confirmed the core count on each Emerald Rapids die, but the current speculation is that each die houses 33 cores with one disabled core.

I read that each die has 35 cores and at least 3 are disabled.

> Emerald Rapids is drop-in compatible with Intel's current Eagle Stream platform with the LGA4677 socket, thus improving the cost of ownership (TCO) and minimizing the server downtime for upgrades.

As with the consumer CPUs, it's standard practice for Intel to retain the same server CPU socket across 2 generations. I'm sure it's mainly for the benefit of their partners and ecosystem, rather than to enable drop-in CPU upgrades. Probably 99%+ of their Xeon customers don't do CPU swaps, but rather just wait until the end of the normal upgrade cycle and do a wholesale system replacement.

DavidC1

> bit_user said: I'm going to speculate the upper end of that improvement is thanks mainly to the increased L3 capacity.
>
> Not sure about that. I think it's mainly a cost-saving exercise. The main way you reduce latency, at that scale, is by improving the interconnect topology or optimizing other aspects of how it works.

No, it's precisely due to performance. They are going from 1510 mm² using 4 dies to 1493 mm² using 2 dies, so the latter requires quite a few more wafers. If it were about cost, they wouldn't have done so.

The number of EMIB chiplets goes down significantly, and fewer hops are needed, as traffic only has to traverse two tiles rather than four.
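The cost-versus-performance disagreement hinges on yield, since a bigger die is more likely to contain a defect. The sketch below applies the textbook Poisson yield model, Y = exp(-D × A), to the 1510 mm² (four dies) and 1493 mm² (two dies) figures quoted above. It assumes equally sized dies, a hypothetical defect density of 0.1 defects per cm², and no salvage of defective dies; in practice Intel can fuse off defective cores (the spare cores discussed in this thread), which softens the penalty considerably, so the numbers are illustrative only.

```python
import math

# Hypothetical defect density: 0.1 defects per cm^2 = 0.001 per mm^2 (assumption)
DEFECT_DENSITY_PER_MM2 = 0.001


def poisson_yield(die_area_mm2: float, defect_density_per_mm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model."""
    return math.exp(-defect_density_per_mm2 * die_area_mm2)


def silicon_per_good_package(total_area_mm2: float, n_dies: int,
                             defect_density_per_mm2: float) -> float:
    """Expected die area (mm^2) consumed per package built only from defect-free dies.

    Assumes equally sized dies and ignores salvage, wafer-edge loss, and scribe lines.
    """
    die_area = total_area_mm2 / n_dies
    good_fraction = poisson_yield(die_area, defect_density_per_mm2)
    return n_dies * die_area / good_fraction


if __name__ == "__main__":
    four_die = silicon_per_good_package(1510, 4, DEFECT_DENSITY_PER_MM2)  # Sapphire Rapids
    two_die = silicon_per_good_package(1493, 2, DEFECT_DENSITY_PER_MM2)   # Emerald Rapids
    print(f"4 dies: {four_die:.0f} mm^2 of silicon per good package")
    print(f"2 dies: {two_die:.0f} mm^2 of silicon per good package")
    print(f"Ratio (2-die / 4-die): {two_die / four_die:.2f}")
```

Under these placeholder assumptions, the two-die layout consumes roughly 40% more silicon per fully working package; a lower defect density shrinks that gap, and a higher one widens it.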

bit_user

> DavidC1 said: They are going from 1510 mm² using 4 dies to 1493 mm² using 2 dies, so the latter requires quite a few more wafers. If it were about cost, they wouldn't have done so.

I assume yield is the reason they have 3 spare cores per die (assuming that info I found is correct).

> DavidC1 said: The number of EMIB chiplets goes down significantly, and fewer hops are needed, as traffic only has to traverse two tiles rather than four.

Okay, then what's the latency impact of an EMIB hop?

DavidC1

> bit_user said: I assume yield is the reason they have 3 spare cores per die (assuming that info I found is correct).
>
> Okay, then what's the latency impact of an EMIB hop?

Go read about Semianalysis's article on EMR and come back.

They aren't saving anything here, and there would be no reason to, as they are barely making a profit. Aiming for lower material cost over performance is a losing battle, since increased performance can improve positioning and thus profits.

That's why things like the 3x increase in L3 cache capacity happened.

Considering the small core count increase and basically the same core, up to 40% is very respectable and comes from lower-level changes.

Metal Messiah.

> bit_user said: I read that each die has 35 cores and at least 3 are disabled.

Nope, the data that some other tech sites have outlined seems inaccurate. I presume there are two 33-core dies in the Emerald Rapids lineup, which means only 1 core per die is disabled to give a 64-core top-end SKU.


bit_user

> DavidC1 said: Go read about Semianalysis's article on EMR and come back.

Will do, though you are allowed to post (relevant) links...

> DavidC1 said: They aren't saving anything here, and there would be no reason to, as they are barely making a profit. Aiming for lower material cost over performance is a losing battle, since increased performance can improve positioning and thus profits.

The way I see 2 dies vs. 4 affecting performance is actually by reducing power consumption. Making the interconnect more energy-efficient by eliminating cross-die links gives you more power budget for useful computation.

Let's not forget the original point of contention, which was that smaller dies = lower latency. If you have evidence to support that, I'd like to see it.

> DavidC1 said: That's why things like the 3x increase in L3 cache capacity happened.
>
> Considering the small core count increase and basically the same core, up to 40% is very respectable and comes from lower-level changes.

The benefits of more L3 cache have been extensively demonstrated by AMD. Couple that with better energy efficiency from the Intel 7+ manufacturing node, fewer cross-die links, and a couple more cores, and the performance figures sound plausible to me.

George³

I'm having trouble calculating the aggregate CPU-to-RAM communication speed for Emerald Rapids. What is the total width of the bus, 256 or 512 bits?

DavidC1

> George³ said: I'm having trouble calculating the aggregate CPU-to-RAM communication speed for Emerald Rapids. What is the total width of the bus, 256 or 512 bits?

It's 8 channels, so 512 bits.

DDR5 has internal 32-bit channels, but in practice it doesn't matter since DIMMs are always 64 bits wide. Emerald Rapids is also a drop-in socket replacement for Sapphire Rapids, which is also 8-channel.
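To finish George³'s calculation: peak theoretical bandwidth is the bus width in bytes multiplied by the transfer rate. The sketch below assumes eight 64-bit channels (512 bits, ECC bits excluded) and plugs in DDR5-4800, Sapphire Rapids' supported speed, and DDR5-5600, which the article expects but Intel had not confirmed. These are per-socket theoretical peaks; sustained bandwidth in real workloads is lower.

```python
CHANNELS = 8
BITS_PER_CHANNEL = 64  # data bits per DIMM channel, ECC excluded


def peak_bandwidth_gbs(channels: int, bits_per_channel: int, mt_per_s: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = channels * bits_per_channel // 8
    return bytes_per_transfer * mt_per_s * 1e6 / 1e9


if __name__ == "__main__":
    print(f"Bus width: {CHANNELS * BITS_PER_CHANNEL} bits")
    for label, rate in (("DDR5-4800 (Sapphire Rapids)", 4800),
                        ("DDR5-5600 (expected for Emerald Rapids)", 5600)):
        print(f"{label}: {peak_bandwidth_gbs(CHANNELS, BITS_PER_CHANNEL, rate):.1f} GB/s")
```

That works out to 307.2 GB/s at DDR5-4800 and 358.4 GB/s at DDR5-5600 per socket.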

@bit_user Actually, I never said it reduced latency due to being a smaller die. It's the reduced travel and fewer hops from having only two dies that improve latency.

If Intel wanted to focus on reducing cost, they wouldn't have increased L3 cache so drastically.
